Reading Time: 5 minutes

Status: Final Blueprint

Author: Shahab Al Yamin Chawdhury

Organization: Principal Architect & Consultant Group

Research Date: September 27, 2022

Location: Dhaka, Bangladesh

Version: 1.0

I. Executive Summary

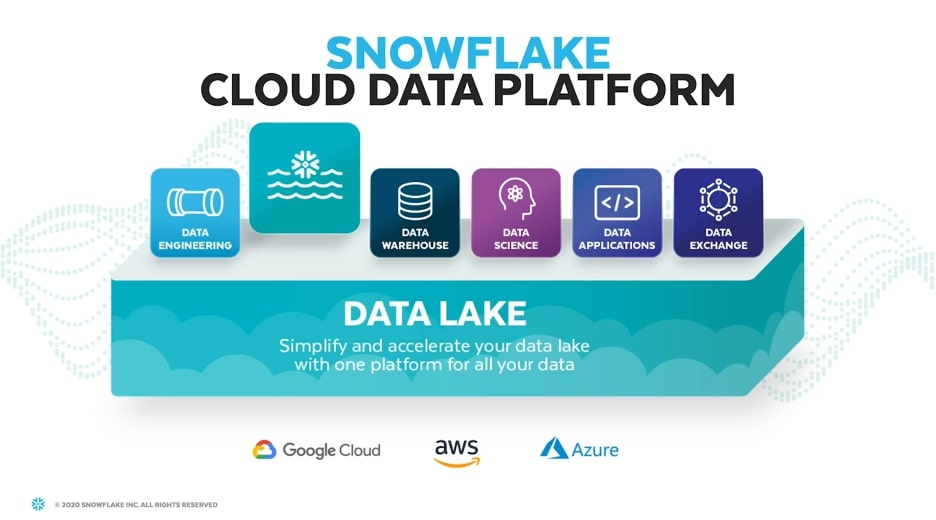

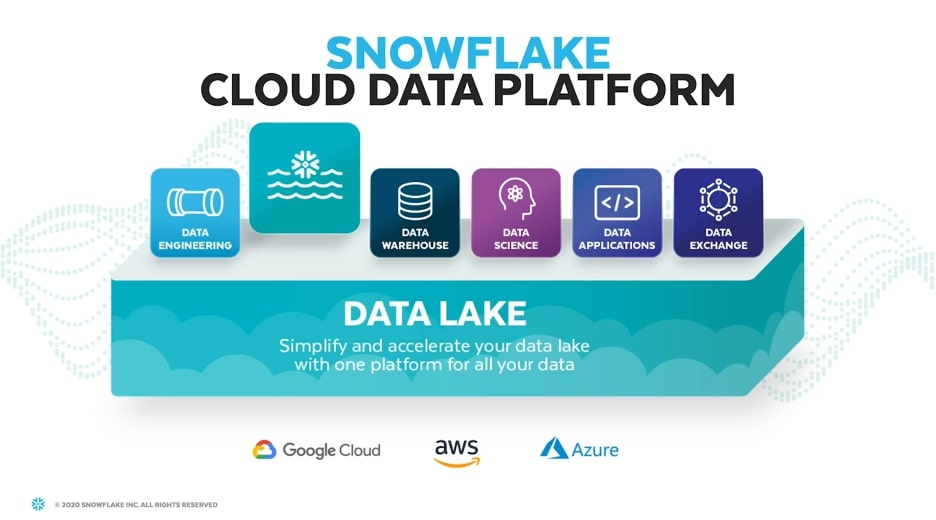

This guide explores Snowflake’s role as a unified data platform that bridges traditional data warehouses and flexible data lakes. Its cloud-native architecture decouples storage from compute, so each can scale independently for performance and cost efficiency. Key features such as auto-scaling, real-time ingestion, and zero-copy cloning streamline operations. Reported benefits include an estimated 354% ROI over three years for AI deployments (Forrester TEI Study), a 40% improvement in query efficiency, and up to a 75% reduction in runtimes. Snowflake’s integrated governance and ML/AI capabilities position it as an “AI Data Cloud,” turning data into a trusted, auditable asset. Because pricing is consumption-based, proactive cost optimization is crucial. Competitively, Snowflake stands as a general-purpose platform for SQL-centric analytics and governed data sharing.

II. The Evolving Data Landscape and Snowflake’s Position

The modern data landscape is characterized by increasing data volume, velocity, and variety, posing challenges for traditional data management. Traditional data warehouses are rigid (“schema-on-write”), while data lakes (“schema-on-read”) offer flexibility but risk becoming “data swamps” without proper governance. Modern lakehouses, like Snowflake, combine the strengths of both. Enterprises face data silos, quality issues, unpredictable costs, and security hurdles. Snowflake’s unique cloud-native, multi-cloud, hybrid architecture (decoupled storage/compute) provides a “near-zero management” approach, unifying data warehousing and data lake functionalities to simplify the data stack and accelerate insights.

III. Snowflake Data Lake: Core Architecture and Foundational Concepts

Snowflake’s architecture features three independently scalable layers:

- Database Storage Layer: Stores all data in an optimized, compressed, columnar format in cloud object storage, fully managed by Snowflake.

- Query Processing Layer (Virtual Warehouses): Independent Massively Parallel Processing (MPP) compute clusters execute queries, offering auto-scaling and workload isolation.

- Cloud Services Layer: The “brain” managing authentication, metadata, query optimization, and access control, including Query Result Cache and Metadata Cache.

This separation, combined with Micro-Partitioning (automatic data organization for efficient query pruning) and Schema-on-Read flexibility (supporting diverse data types like JSON, Parquet, and unstructured files), ensures high performance and cost efficiency. Multi-layered Caching Mechanisms (Query Result Cache, Metadata Cache, Virtual Warehouse Cache) further boost query performance and reduce compute consumption.
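The pruning idea behind micro-partitioning can be illustrated with a minimal Python sketch. It is a toy model, not Snowflake's implementation: the `MicroPartition` class, the fixed chunk size, and the function names are hypothetical, and the only assumption carried over from the text is that per-partition metadata lets a query skip partitions that cannot contain matching rows.

```python
from dataclasses import dataclass


@dataclass
class MicroPartition:
    """Hypothetical stand-in for a micro-partition: rows plus min/max metadata."""
    rows: list
    min_val: int
    max_val: int


def build_partitions(rows, column, size):
    """Split rows into fixed-size chunks and record min/max of `column` per chunk."""
    parts = []
    for i in range(0, len(rows), size):
        chunk = rows[i:i + size]
        values = [r[column] for r in chunk]
        parts.append(MicroPartition(chunk, min(values), max(values)))
    return parts


def scan_with_pruning(parts, column, low, high):
    """Return matching rows and the number of partitions actually scanned."""
    scanned = 0
    matches = []
    for p in parts:
        if p.max_val < low or p.min_val > high:
            continue  # metadata alone proves no row here can match, so skip it
        scanned += 1
        matches.extend(r for r in p.rows if low <= r[column] <= high)
    return matches, scanned


# 100 rows loaded in day order, so each 10-row chunk covers exactly one day.
rows = [{"order_id": i, "day": i // 10} for i in range(100)]
parts = build_partitions(rows, "day", size=10)
matches, scanned = scan_with_pruning(parts, "day", 3, 4)
print(len(parts), scanned, len(matches))  # prints: 10 2 20
```

In this toy data set the filter on `day` reads 2 of 10 partitions. The benefit depends on how well the data's physical order correlates with the filter column, which is the motivation for clustering features.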

IV. Key Features and Capabilities for Data Lake Operations

Snowflake offers a powerful suite of features for data lake operations:

- Scalability & Elasticity: Auto-scaling, multi-cluster warehouses, and concurrency scaling dynamically adjust compute resources.

- Data Ingestion: Supports batch loading (COPY INTO), real-time streaming (Snowpipe, Snowpipe Streaming), and API-driven ingestion.

- Data Transformation: Leverages the ELT paradigm with SQL-based transformations and Snowpark (enabling Python, Java, Scala code execution directly in Snowflake).

- Data Management & Durability: Includes Zero-Copy Cloning (instant, cost-efficient data copies), Time Travel (access historical data), and Fail-Safe (additional data protection).

- Secure Data Sharing: Enables live, secure data sharing across accounts without data copies, fostering collaboration and monetization.

These capabilities contribute to a “ZeroOps” experience, shifting operational burden to the platform and accelerating time-to-insight.
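To show why a zero-copy clone can be instant and initially storage-free, here is a minimal Python sketch of the general copy-on-write idea. The `Storage` and `Table` classes are hypothetical and greatly simplified; the sketch assumes only that tables reference immutable partitions and that a clone copies those references rather than the data.

```python
class Storage:
    """Hypothetical shared store of immutable partitions, keyed by id."""

    def __init__(self):
        self.partitions = {}
        self._next_id = 0

    def write(self, rows):
        pid = self._next_id
        self._next_id += 1
        self.partitions[pid] = tuple(rows)  # never modified after being written
        return pid


class Table:
    """A table is just an ordered list of partition ids in shared storage."""

    def __init__(self, storage, partition_ids=()):
        self.storage = storage
        self.partition_ids = list(partition_ids)

    def insert(self, rows):
        self.partition_ids.append(self.storage.write(rows))

    def clone(self):
        # Copy only the list of pointers, never the partitions themselves.
        return Table(self.storage, self.partition_ids)

    def rows(self):
        return [r for pid in self.partition_ids for r in self.storage.partitions[pid]]


storage = Storage()
prod = Table(storage)
prod.insert(["a", "b"])

dev = prod.clone()
stored_after_clone = len(storage.partitions)  # cloning wrote no new partitions

dev.insert(["c"])  # only the clone's new data consumes additional storage
print(stored_after_clone, prod.rows(), dev.rows())
# prints: 1 ['a', 'b'] ['a', 'b', 'c']
```

Because partitions are immutable in this model, keeping older pointer lists around is also what would make a Time Travel style lookup of historical data possible.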

V. Business Value, ROI, and Efficiency Gains

Snowflake adoption yields significant, quantifiable returns:

- Financial ROI: Estimated 354% ROI over three years for AI deployments on Snowflake (Forrester TEI Study). Early generative AI adopters report an average 41% ROI.

- Revenue Growth: 6% increase in incremental revenue from data-driven innovation; 4.5% reduction in customer churn.

- Operational Efficiency: 40% improvement in query efficiency; up to 75% reduction in runtimes; 35% time savings for data engineers; and significant FTE savings in IT/DBA and analyst roles.

This impact drives faster, more informed decision-making and fosters innovation by freeing up resources for strategic initiatives.
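As a worked example of what a 354% ROI means, the sketch below uses the conventional definition of ROI as net benefits divided by costs, on the assumption that the cited study follows it. The cost figure is hypothetical and chosen only to make the arithmetic easy to follow; it does not come from the study.

```python
def roi_percent(total_benefits, total_costs):
    """ROI as net benefits divided by costs, expressed as a percentage."""
    return (total_benefits - total_costs) * 100 / total_costs


def benefits_for_roi(roi_pct, total_costs):
    """Total benefits implied by a given ROI percentage and total cost."""
    return total_costs * (100 + roi_pct) / 100


cost = 1_000_000  # hypothetical three-year cost, for illustration only
implied_benefits = benefits_for_roi(354, cost)
print(implied_benefits)                     # total benefits implied by 354% ROI
print(roi_percent(implied_benefits, cost))  # recovers the ROI percentage
```

Under this definition, a 354% ROI on a hypothetical cost of 1,000,000 implies total benefits of 4,540,000, that is, net benefits of 3,540,000.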

VI. Data Governance, Security, and Compliance Framework

Snowflake provides a robust, built-in framework for data integrity, privacy, and compliance:

- Access Control: Granular Role-Based Access Control (RBAC) with securable objects, privileges, and hierarchical roles.

- Data Protection: Dynamic Data Masking, Row-Level Security (RLS), and Column-Level Security (CLS) protect sensitive data.

- Encryption: End-to-end encryption (at rest and in transit) is default, with Tri-Secret Secure for enhanced control.

- Compliance: Adherence to major industry regulations and certifications (e.g., GDPR, HIPAA, SOC 2, FedRAMP).

- Snowflake Horizon Catalog: A unified governance solution for metadata management, data lineage, object tagging, and automated classification, transforming data lakes into trusted, compliant assets.
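The interaction between hierarchical roles and dynamic masking can be modeled in a few lines of Python. This is a conceptual sketch only: the role names, the object names, and the `UNMASK` privilege are hypothetical labels invented for the example, and in Snowflake itself such rules are expressed as SQL grants and masking policies rather than application code.

```python
# Hypothetical hierarchy: a role inherits the privileges of every role granted to it.
ROLE_GRANTS = {
    "ANALYST": [],
    "DATA_ENGINEER": ["ANALYST"],
    "PRIVACY_OFFICER": ["DATA_ENGINEER"],
}

# Hypothetical direct privileges, as (privilege, securable object) pairs.
PRIVILEGES = {
    "ANALYST": {("SELECT", "SALES.ORDERS")},
    "DATA_ENGINEER": {("INSERT", "SALES.ORDERS")},
    "PRIVACY_OFFICER": {("UNMASK", "SALES.ORDERS.EMAIL")},
}


def effective_privileges(role):
    """Direct privileges plus everything inherited from granted roles."""
    privs = set(PRIVILEGES.get(role, set()))
    for granted in ROLE_GRANTS.get(role, []):
        privs |= effective_privileges(granted)
    return privs


def mask_email(value, role):
    """Return the real value only to roles holding the (hypothetical) UNMASK privilege."""
    if ("UNMASK", "SALES.ORDERS.EMAIL") in effective_privileges(role):
        return value
    local, _, domain = value.partition("@")
    return "*" * len(local) + "@" + domain


print(mask_email("jane@example.com", "ANALYST"))          # prints: ****@example.com
print(mask_email("jane@example.com", "PRIVACY_OFFICER"))  # prints: jane@example.com
```

The point of the sketch is that the same query returns different values depending on the querying role, while the stored data stays unchanged.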

VII. Advanced Analytics and Machine Learning Workflows

Snowflake supports end-to-end ML workflows directly on the platform:

- Real-Time Analytics: Enabled by Snowpipe Streaming for low-latency ingestion and direct analysis of S3 Tables (e.g., Apache Iceberg).

- Snowpark for ML: Allows data scientists to build and deploy ML workflows using Python, Java, or Scala directly within Snowflake, “bringing computation to the data” to eliminate data movement and enhance governance.

- MLOps Components: Integrated Feature Store (centralized feature management), Model Registry (model versioning and serving), and ML Observability (tracking model performance and data drift) streamline the ML lifecycle.

This positions Snowflake as an “AI Data Cloud,” enabling operationalized AI at scale directly on consolidated data.
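To make "data drift" concrete, the following sketch computes the Population Stability Index (PSI), one common drift metric. It is offered as an illustration of the concept only; the source does not say which metrics Snowflake's ML Observability uses, and the bin edges, the sample values, and the small floor applied to empty bins are assumptions of this example. All values are assumed to fall within the given edges.

```python
import math


def bin_shares(values, edges, floor=1e-6):
    """Share of values per bin; the last bin includes its upper edge."""
    counts = [0] * (len(edges) - 1)
    last = len(edges) - 2
    for v in values:
        for i in range(len(edges) - 1):
            if edges[i] <= v < edges[i + 1] or (i == last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    # A small floor avoids log(0) and division by zero for empty bins.
    return [max(c / total, floor) for c in counts]


def psi(expected, actual, edges):
    """Population Stability Index between a baseline and a new sample."""
    e = bin_shares(expected, edges)
    a = bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


edges = [0, 25, 50, 75, 100]
training = [10, 20, 30, 40, 60, 70, 80, 90]      # hypothetical baseline feature values
serving_same = list(training)                    # no drift
serving_shifted = [60, 65, 70, 80, 85, 90, 95, 99]  # mass moved to the upper bins

print(psi(training, serving_same, edges))        # prints: 0.0
print(psi(training, serving_shifted, edges) > 0)  # prints: True
```

A monitoring job would typically compare the PSI against a chosen threshold and raise an alert when it is exceeded; the threshold itself is a policy decision.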

VIII. Ecosystem Integration and Data Consolidation Strategies

Snowflake acts as a central data hub, enabling comprehensive data consolidation:

- Integration with Data Sources: Seamlessly connects with cloud object storage (S3, ADLS, GCS), databases, and APIs.

- ETL/ELT Tool Integration: Compatible with leading tools like Fivetran, dbt, Airbyte, and Matillion for robust data pipelines.

- Business Intelligence (BI) Platform Integration: Deep integration with Tableau, Power BI, Qlik, and native Snowsight dashboards for data consumption.

- Snowflake Marketplace: A secure platform for discovering and accessing third-party data and deploying Snowflake Native Apps.

- Data Consolidation: Unifies structured, semi-structured, and unstructured data into a single, governed environment, eliminating silos and creating a “single source of truth.”

IX. Challenges, Considerations, and Optimization

While powerful, Snowflake requires proactive management:

- Cost Management: Consumption-based pricing can lead to unpredictable costs. Optimize with right-sizing virtual warehouses, aggressive auto-suspend, resource monitors, query optimization (e.g., Query Profile, caching), and careful storage/data transfer management (e.g., Time Travel retention, Data Sharing).

- Performance Optimization: Address bottlenecks through query tuning, proper warehouse configuration, and Snowflake features like Automatic Clustering, Search Optimization Service, and Materialized Views.

- Data Quality: Mitigate issues (duplicates, schema drift) with proactive cleansing, automated monitoring, and data enrichment.
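The effect of auto-suspend settings on cost can be estimated with a small model. The sketch below assumes standard warehouses billed per second with a 60-second minimum each time a warehouse resumes, and credits per hour that double with each size step. The workload, function names, and the simplification that a warehouse stays up until the idle timeout expires are assumptions of this example, so real bills should be checked against account usage data.

```python
# Credits per hour for standard warehouses double with each size step.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}
MIN_BILLED_SECONDS = 60  # minimum charge each time the warehouse resumes


def billed_seconds(bursts, auto_suspend_s):
    """Estimate billed seconds for query bursts given an idle timeout.

    bursts: list of (start_second, duration_seconds), sorted by start time.
    The warehouse resumes when a burst arrives and suspends after
    `auto_suspend_s` seconds without activity.
    """
    total = 0
    run_start = None
    run_end = None  # moment the warehouse would suspend if nothing else arrives
    for start, duration in bursts:
        busy_end = start + duration
        if run_start is not None and start <= run_end:
            # Still running: the burst extends the current run.
            run_end = max(run_end, busy_end + auto_suspend_s)
        else:
            if run_start is not None:
                total += max(run_end - run_start, MIN_BILLED_SECONDS)
            run_start = start
            run_end = busy_end + auto_suspend_s
    if run_start is not None:
        total += max(run_end - run_start, MIN_BILLED_SECONDS)
    return total


def credits(size, seconds):
    """Credits consumed by a warehouse of the given size over `seconds`."""
    return CREDITS_PER_HOUR[size] * seconds / 3600


# Hypothetical workload: three 30-second bursts, ten minutes apart.
bursts = [(0, 30), (600, 30), (1200, 30)]
for timeout in (60, 600):
    secs = billed_seconds(bursts, timeout)
    print(timeout, secs, round(credits("MEDIUM", secs), 3))
```

For this hypothetical workload, a 60-second timeout bills 270 seconds while a 600-second timeout bills 1,830 seconds, because the warehouse never suspends between bursts. Very short timeouts can also discard the warehouse cache, so the setting is a trade-off rather than a rule.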

Comparative Analysis (Key Differentiators):

- Snowflake vs. Databricks: Snowflake excels in ease of use for SQL users and automatic optimization. Databricks offers more mature capabilities for unstructured data and deep ML lifecycle management (MLflow).

- Snowflake vs. Cloud-Native (AWS Lake Formation, ADLS, BigQuery): Snowflake provides multi-cloud flexibility and a unified platform. Cloud-native services offer deeper integration within their respective ecosystems and potentially lower storage costs for raw data.

- Snowflake vs. Traditional Data Warehouses: Snowflake offers elastic scalability, consumption-based pricing, native semi-structured data support, and “near-zero management,” overcoming the limitations of traditional, rigid systems.

X. Future Outlook and Strategic Roadmap

Snowflake’s roadmap focuses on deepening its “AI Data Cloud” capabilities, enhancing data interoperability, and simplifying the data experience. Key innovations include Snowflake Intelligence (agentic AI for natural language data interaction), Cortex AISQL (AI functions in SQL), Snowflake Openflow (managed multimodal data ingestion), enhanced Apache Iceberg support, and Snowflake Gen2 Warehouses for improved performance. These advancements aim to empower data engineers, simplify modernization, and provide a unified, trusted foundation for AI-driven insights.

XI. Conclusions and Recommendations

Snowflake is a leading cloud data platform, offering unparalleled scalability, performance, and governance for modern data lakes. Its “ZeroOps” approach and quantifiable ROI make a strong business case.

Actionable Recommendations for Principal Architects & Consultants:

- Prioritize a Unified Data Strategy: Leverage Snowflake to consolidate diverse data and eliminate silos.

- Emphasize “ZeroOps”: Reallocate resources from operations to high-value analytics and AI.

- Build Strong Business Cases: Utilize compelling ROI (354% for AI) and efficiency metrics.

- Implement Proactive Cost Management: Establish frameworks for predictable spending.

- Leverage Integrated Governance: Ensure trusted, compliant data lakes with Horizon Catalog.

- Accelerate AI/ML: Use Snowpark for in-platform ML workflows.

- Conduct Tailored Assessments: Compare with competitors based on specific workloads.

- Stay Abreast of Roadmap: Monitor Snowflake’s innovations for future-proofing.