Reading Time: 3 minutes

Detection‑as‑Code (DaC) is moving from a niche practice to a mainstream SOC engineering discipline. By embedding detection logic into CI/CD‑style pipelines, organizations are achieving faster deployment cycles, higher detection accuracy, and measurable reductions in false positives.

Industry adoption is accelerating due to three converging factors:

- Operational inefficiency of traditional, manually managed detection rules.

- Threat velocity — adversary TTPs change faster than static rules can be updated.

- Maturity of automation and AI‑assisted validation in SOC workflows.

Current State and Adoption Metrics

- Adoption Rate:
  - 2023: <10% of enterprise SOCs had formal DaC pipelines.
  - 2025: ~38% adoption in large enterprises; projected to exceed 60% by 2027 (Gartner, SANS).

- Deployment Speed:
  - Traditional rule deployment: 2–6 weeks from creation to production.
  - DaC pipelines: 1–3 days on average, with some teams achieving same‑day deployment.

- False Positive Reduction:
  - Early adopters report 25–40% fewer false positives, attributed to automated pre‑deployment validation and regression testing.

- SOC Efficiency Gains:
  - Analyst time spent on rule maintenance reduced by 30–50%.
  - Mean Time to Detect (MTTD) improved by 20–35% in mature DaC environments.

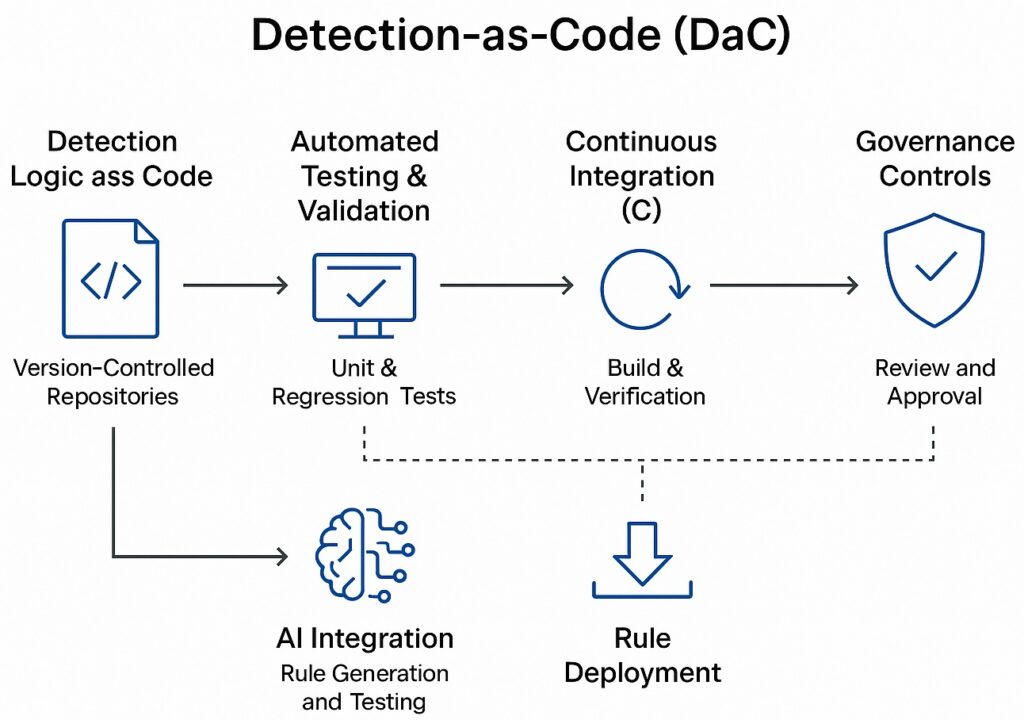

How DaC Works in SOC Pipelines

- Detection Logic as Version‑Controlled Code
  - Rules, queries, and correlation logic stored in Git‑style repositories.
  - Enables peer review, change tracking, and rollback.

- Automated Testing & Validation
  - Unit tests against historical event datasets.
  - AI‑assisted simulation of attack patterns to validate detection coverage.

- Continuous Integration (CI)
  - Automated builds verify syntax, schema compliance, and performance impact.

- Continuous Deployment (CD)
  - Approved rules pushed to SIEM, EDR, NDR, and SOAR platforms automatically.
  - Canary deployments used to monitor impact before full rollout.
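The stages above can be illustrated with a short Python sketch. It assumes a hypothetical rule format (an ID, a MITRE ATT&CK technique, and a `selection` mapping of event fields to required substrings, loosely inspired by Sigma's selection blocks) and hypothetical event fields such as `process_name` and `command_line`. The schema check stands in for the CI stage and the labeled-event replay stands in for unit testing against historical data; neither reflects any specific SIEM's API.

```python
# Minimal Detection-as-Code sketch: a rule stored as data, a CI-style schema
# check, and a unit test that replays labeled historical events.
# The rule format and event field names are hypothetical.
from dataclasses import dataclass

REQUIRED_KEYS = {"id", "title", "attack_technique", "selection"}


@dataclass
class Rule:
    id: str
    title: str
    attack_technique: str        # MITRE ATT&CK technique ID the rule maps to
    selection: dict[str, str]    # event field -> substring that must appear

    def matches(self, event: dict[str, str]) -> bool:
        # All selection fields must contain the expected substring
        # (case-insensitive); a missing field is treated as an empty string.
        return all(
            needle.lower() in event.get(key, "").lower()
            for key, needle in self.selection.items()
        )


def validate_schema(raw: dict) -> list[str]:
    """CI stage: return schema errors; an empty list means the rule passes."""
    errors = [f"missing key: {k}" for k in sorted(REQUIRED_KEYS - set(raw))]
    if "selection" in raw and not isinstance(raw["selection"], dict):
        errors.append("selection must be a mapping of field -> value")
    return errors


def run_unit_tests(rule: Rule, labeled: list[tuple[dict, bool]]) -> list[str]:
    """Test stage: replay labeled events and report verdict mismatches."""
    return [
        f"event {i}: expected alert={expected}"
        for i, (event, expected) in enumerate(labeled)
        if rule.matches(event) != expected
    ]


raw_rule = {
    "id": "dac-0001",
    "title": "Encoded PowerShell command line",
    "attack_technique": "T1059.001",
    "selection": {"process_name": "powershell.exe", "command_line": "-enc"},
}
history = [
    ({"process_name": "powershell.exe", "command_line": "powershell -enc SQBFAFgA"}, True),
    ({"process_name": "powershell.exe", "command_line": "Get-ChildItem"}, False),
    ({"process_name": "cmd.exe", "command_line": "dir"}, False),
]

schema_errors = validate_schema(raw_rule)
rule = Rule(**raw_rule)
test_failures = run_unit_tests(rule, history)
print(schema_errors, test_failures)  # [] []
```

In a real pipeline both checks would run on every pull request, and a non-empty error or failure list would block the merge.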

AI’s Role in DaC

- Rule Generation: AI models trained on threat intel and historical incidents generate initial detection logic.

- Coverage Gap Analysis: AI compares deployed rules against MITRE ATT&CK mappings to identify missing detections.

- Adaptive Tuning: Machine learning adjusts thresholds based on environment‑specific baselines, reducing noise.

- Regression Testing: AI replays historical attack data to ensure new rules don’t break existing detections.
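Two of these tasks reduce to simple operations once the AI layer is stripped away. The sketch below assumes each deployed rule is tagged with the ATT&CK technique IDs it covers and that the team maintains its own list of priority techniques (both hypothetical inputs). The threshold function uses a plain mean-plus-k-standard-deviations baseline as a stand-in for machine-learning tuning; it illustrates environment-specific thresholds, not any vendor's model.

```python
# Two AI-assisted DaC tasks reduced to their simplest non-ML core:
# ATT&CK coverage-gap analysis and baseline-driven alert thresholds.
# Rule-to-technique mappings and baseline counts are hypothetical.
import statistics


def coverage_gaps(deployed: dict[str, set[str]], priority: set[str]) -> set[str]:
    """Return priority ATT&CK technique IDs not covered by any deployed rule.

    `deployed` maps rule ID -> set of technique IDs that rule detects.
    """
    covered = set().union(*deployed.values())
    return priority - covered


def adaptive_threshold(baseline_counts: list[float], k: float = 3.0) -> float:
    """Threshold = baseline mean + k population standard deviations.

    A simple statistical stand-in for ML-based tuning: a noisier
    environment gets a higher threshold, a quieter one a lower threshold.
    """
    mean = statistics.fmean(baseline_counts)
    spread = statistics.pstdev(baseline_counts)
    return mean + k * spread


deployed_rules = {
    "dac-0001": {"T1059.001"},               # PowerShell
    "dac-0002": {"T1003.001", "T1055"},      # LSASS memory, process injection
}
priority_techniques = {"T1059.001", "T1003.001", "T1021.001", "T1486"}
print(sorted(coverage_gaps(deployed_rules, priority_techniques)))
# ['T1021.001', 'T1486']

# Hourly failed-login counts for one host during a quiet baseline period.
baseline = [4, 6, 5, 5, 4, 6]
print(round(adaptive_threshold(baseline), 2))  # 7.45
```

An AI model would replace the priority list and the fixed `k` with learned inputs, but the output it feeds into the pipeline has the same shape: a set of uncovered techniques and a per-environment threshold.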

Business Impact

| Metric | Traditional Model | DaC Model (Mature) | Improvement |
|---|---|---|---|
| Rule Deployment Cycle | 2–6 weeks | 1–3 days | 85–95% faster |
| False Positive Rate | Baseline | −25% to −40% | 25–40% fewer false positives |
| Analyst Time on Rule Maintenance | 30–40% of workload | 10–20% of workload | 50%+ freed capacity |
| MTTD | Baseline | −20% to −35% | 20–35% faster detection |
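The MTTD and false-positive rows can be tracked with a few lines of code. This sketch assumes hypothetical alert records carrying when the malicious activity began, when the alert fired, and the analyst's triage verdict. It defines the false-positive rate as the share of alerts triaged as false positives (a common SOC usage, distinct from the statistical FP/(FP+TN) rate) and reports change against a pre-DaC baseline as a percentage.

```python
# Computing two of the table's metrics from alert records and comparing them
# with a pre-DaC baseline. Record fields and sample values are hypothetical.
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alert:
    activity_start: datetime   # when the malicious activity began (from investigation)
    detected_at: datetime      # when the alert fired
    true_positive: bool        # analyst verdict after triage


def mttd(alerts: list[Alert]) -> timedelta:
    """Mean time to detect, computed over true-positive alerts only."""
    delays = [a.detected_at - a.activity_start for a in alerts if a.true_positive]
    return sum(delays, timedelta()) / len(delays)


def false_positive_rate(alerts: list[Alert]) -> float:
    """Share of alerts that triage marked as false positives."""
    return sum(not a.true_positive for a in alerts) / len(alerts)


def pct_change(baseline: float, current: float) -> float:
    """Percentage change; negative means improvement for lower-is-better metrics."""
    return (current - baseline) / baseline * 100


t0 = datetime(2025, 1, 6, 9, 0)
quarter = [
    Alert(t0, t0 + timedelta(hours=4), True),
    Alert(t0, t0 + timedelta(hours=2), True),
    Alert(t0, t0 + timedelta(minutes=5), False),
    Alert(t0, t0 + timedelta(minutes=9), False),
]
print(mttd(quarter))                 # 3:00:00
print(false_positive_rate(quarter))  # 0.5
# MTTD of 3 h this quarter versus a hypothetical 4 h pre-DaC baseline:
print(pct_change(baseline=4.0, current=3.0))  # -25.0
```

A −25% MTTD change computed this way would fall inside the −20% to −35% range cited for mature DaC environments.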

Future Outlook (2025–2028)

- Standardization:
  - Expect the emergence of open DaC schema standards that enable cross‑platform portability of detection logic.
  - Likely alignment with the OSSEM (Open Source Security Events Metadata) data model and the Sigma rule format.

- Integration with CTEM:
  - DaC pipelines will feed directly into Continuous Threat Exposure Management dashboards, linking detection coverage to exposure scoring.

- Full AI‑Assisted Pipelines:
  - By 2028, >50% of new detection rules in mature SOCs will be AI‑generated and human‑validated before deployment.

- Regulatory Influence:
  - Financial and critical infrastructure sectors may see DaC adoption mandated as part of operational resilience requirements.

Risks and Mitigation

- Pipeline Compromise:
  - Risk: Malicious code injection into detection logic.
  - Mitigation: Code signing, multi‑party approval, and isolated build environments.

- Over‑Automation:
  - Risk: Deploying unvetted AI‑generated rules that cause alert floods or miss threats.
  - Mitigation: Mandatory human review and staged rollouts.

- Skill Gap:
  - Risk: SOC analysts may lack DevOps/CI/CD skills.
  - Mitigation: Cross‑training programs and dedicated detection engineering roles.
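The mitigations for pipeline compromise and over-automation can be expressed as deployment gates that must all pass before a rule ships. In this sketch, HMAC-SHA256 from Python's standard library is only a stand-in for real code signing, which typically uses asymmetric keys (for example, signed Git commits); the key, approver names, and the 3× canary alert-volume ratio are hypothetical values chosen for illustration.

```python
# Three pre-deployment gates: content integrity, multi-party approval, and a
# canary alert-volume check. HMAC is only a stand-in for real code signing;
# the key, names, and thresholds are hypothetical.
import hashlib
import hmac

SIGNING_KEY = b"demo-key"  # hypothetical; real keys belong in a KMS or HSM


def sign(rule_text: str) -> str:
    return hmac.new(SIGNING_KEY, rule_text.encode(), hashlib.sha256).hexdigest()


def integrity_ok(rule_text: str, signature: str) -> bool:
    # Constant-time comparison reduces the risk of timing attacks.
    return hmac.compare_digest(sign(rule_text), signature)


def approvals_ok(author: str, approvers: set[str], required: int = 2) -> bool:
    # Self-approval does not count toward the required number of reviewers.
    return len(approvers - {author}) >= required


def canary_ok(canary_rate: float, expected_rate: float, max_ratio: float = 3.0) -> bool:
    # Halt full rollout if the canary fires far more often than expected.
    return canary_rate <= expected_rate * max_ratio


def may_deploy(rule_text: str, signature: str, author: str, approvers: set[str],
               canary_rate: float, expected_rate: float) -> bool:
    return (integrity_ok(rule_text, signature)
            and approvals_ok(author, approvers)
            and canary_ok(canary_rate, expected_rate))


rule_text = "id: dac-0001\nversion: 2\n"
sig = sign(rule_text)  # produced by the isolated build environment
print(may_deploy(rule_text, sig, "alice", {"alice", "bob", "carol"}, 4.0, 2.0))      # True
print(may_deploy(rule_text + "tampered", sig, "alice", {"bob", "carol"}, 4.0, 2.0))  # False
print(may_deploy(rule_text, sig, "alice", {"alice", "bob"}, 4.0, 2.0))               # False
print(may_deploy(rule_text, sig, "alice", {"bob", "carol"}, 10.0, 2.0))              # False
```

Keeping each gate as a separate function lets the pipeline report which control blocked a deployment, which supports the mandatory human review step.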

Strategic Recommendations for the Board

- Mandate DaC Adoption Roadmap — Target full pipeline integration within 18–24 months.

- Invest in Detection Engineering — Create hybrid SOC/DevOps roles to own DaC lifecycle.

- Integrate AI Early — Use AI for coverage analysis and regression testing before full rule generation.

- Measure ROI — Track MTTD, MTTR, false positive rates, and analyst capacity gains quarterly.

- Align with CTEM — Ensure DaC outputs feed into unified exposure management dashboards for board‑level visibility.

Detection‑as‑Code is no longer experimental — it’s becoming a core SOC engineering discipline. The organizations that operationalize DaC now will gain measurable speed, accuracy, and resilience advantages, while those that delay will face widening detection gaps against AI‑accelerated threats.
