Reading Time: 4 minutes

Status: Final Blueprint

Author: Shahab Al Yamin Chawdhury

Organization: Principal Architect & Consultant Group

Research Date: February 3, 2022

Location: Dhaka, Bangladesh

Version: 1.0

Part I: The Strategic Imperative of Data Integrity

1.0 Defining the Data Integrity Landscape

Data integrity is the assurance of data’s accuracy, completeness, consistency, and reliability throughout its lifecycle. It is the foundation of trust in all data-driven activities.

- Core Concepts:

- Data Integrity: Preserving the original, unaltered state of data.

- Data Quality: The fitness of data for a specific purpose. Integrity is a prerequisite for quality.

- Data Security: Protecting data from unauthorized access and modification. Security enables integrity by limiting who can change data.

- ALCOA+ Framework: A set of auditable principles for ensuring data integrity: Attributable, Legible, Contemporaneous, Original, Accurate, plus Complete, Consistent, Enduring, and Available.
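One common way to check that data is still in its original, unaltered state is to record a cryptographic digest when the data is created and compare against it later. The sketch below is a minimal illustration using Python's standard `hashlib`; the function names and the sample record are hypothetical and are not part of the ALCOA+ framework itself.

```python
import hashlib


def fingerprint(payload: bytes) -> str:
    """Return the SHA-256 hex digest of a payload."""
    return hashlib.sha256(payload).hexdigest()


def is_unaltered(payload: bytes, expected_digest: str) -> bool:
    """True when the payload still matches the digest recorded at creation."""
    return fingerprint(payload) == expected_digest


# Hypothetical record captured at creation time, with its digest stored separately.
original = b"batch=42;result=7.31;operator=jdoe"
recorded = fingerprint(original)

# The same record after a single value was changed.
tampered = b"batch=42;result=7.91;operator=jdoe"

print(is_unaltered(original, recorded))  # True
print(is_unaltered(tampered, recorded))  # False
```

A digest only detects change; it does not say who changed the data or why, which is where the Attributable and Contemporaneous principles come in.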

2.0 The Enterprise Risk and Impact Calculus

Managing data integrity is a risk management discipline focused on identifying threats and understanding their business impact.

- Threat Landscape:

- Human Error: Accidental deletion, incorrect data entry.

- System Failures: Hardware malfunctions, software bugs, network disruptions.

- Malicious Attacks: Ransomware, hacking, viruses.

- Process Failures: Errors during data transfer, migration, or integration.

- Business Impact Analysis (BIA): Integrity failures affect the entire enterprise.

- Financial: Inaccurate reporting, regulatory fines, remediation costs.

- Operational: Flawed decisions, inefficient processes, supply chain disruption.

- Compliance: Violations of SOX, GDPR, or HIPAA that lead to legal action.

- Reputational: Loss of customer and market trust.

- Strategic: Failure of AI/ML initiatives, loss of competitive advantage.

Part II: Governance and Operational Blueprint

3.0 Architecting the Data Governance Program

A formal data governance program provides the structure, rules, and accountability for data integrity.

- DAMA-DMBOK: The “what” of data governance. A comprehensive body of knowledge defining data management functions, roles (Data Owner, Steward), and best practices.

- COBIT 2019: The “how” of data governance. An IT control framework that aligns data management with business objectives, providing auditable control processes like APO14 (Managed Data).

4.0 Designing the Data Integrity Operating Model

The operating model defines how the governance program is structured.

- Models:

- Centralized: A single authority defines and enforces all policies (high control, low flexibility).

- Decentralized: Each business unit manages its own data policies (high flexibility, risk of inconsistency).

- Federated (Hybrid): A central body sets enterprise standards, while business units implement and manage them locally. This model is generally regarded as the most scalable for large organizations.

5.0 Establishing Roles, Responsibilities, and Ownership

Clear roles are critical for execution and accountability.

- Key Roles:

- Executive Sponsor/CDO: Provides strategic direction and resources.

- Data Governance Council: Cross-functional body for strategic decisions and policy approval.

- Data Owner: A senior business leader accountable for a specific data domain.

- Data Steward: A business subject matter expert responsible for day-to-day data management.

- Data Custodian (IT): Manages the technical infrastructure and security controls.

- RACI Matrix: A crucial tool to define who is Responsible, Accountable, Consulted, and Informed for all data integrity processes, eliminating ambiguity.
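A RACI matrix can be kept as plain data and checked automatically. The sketch below assumes a common RACI convention (exactly one Accountable and at least one Responsible per process); the process names and assignments are hypothetical examples built from the roles listed above.

```python
from collections import Counter

# Hypothetical assignments: process -> {role: RACI letter}
raci = {
    "Approve data policy": {
        "Data Governance Council": "A",
        "Data Owner": "R",
        "Data Steward": "C",
        "Data Custodian": "I",
    },
    "Resolve data quality issue": {
        "Data Owner": "A",
        "Data Steward": "R",
        "Data Custodian": "C",
    },
}


def raci_problems(matrix):
    """Return human-readable problems found in a RACI matrix."""
    problems = []
    for process, assignments in matrix.items():
        counts = Counter(assignments.values())
        unknown = set(assignments.values()) - set("RACI")
        if unknown:
            problems.append(f"{process}: unknown codes {sorted(unknown)}")
        if counts["A"] != 1:
            problems.append(
                f"{process}: expected exactly one Accountable, found {counts['A']}"
            )
        if counts["R"] < 1:
            problems.append(f"{process}: no Responsible role assigned")
    return problems


print(raci_problems(raci))  # []
```

Running a check like this whenever the matrix changes is one way to catch processes that have no owner or two competing owners.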

Part III: Implementation and Control Framework

6.0 Managing Integrity Across the Data Lifecycle

Controls must be applied at every stage of the data’s journey.

- Lifecycle Stages: Create/Collect → Store/Process → Use/Share → Archive → Destroy.

- Stage-Specific Controls:

- Creation: Input validation, standardization.

- Storage/Processing: Access controls, encryption, data lineage.

- Usage: Master Data Management (MDM), metadata catalogs.

- Archiving: Retention policies, media refreshment.

- Destruction: Secure deletion procedures, certificates of destruction.
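As an illustration of the Creation-stage controls, the sketch below standardizes a record and then validates it before it is stored. The field names (`customer_id`, `email`, `signup_date`) and the rules are hypothetical; real rules would come from the data owner's standards.

```python
from datetime import date


def standardize(record: dict) -> dict:
    """Trim whitespace and normalize casing before validation."""
    return {
        "customer_id": str(record.get("customer_id", "")).strip(),
        "email": str(record.get("email", "")).strip().lower(),
        "signup_date": str(record.get("signup_date", "")).strip(),
    }


def validate(record: dict) -> list:
    """Return a list of validation errors; an empty list means the record passes."""
    errors = []
    if not record["customer_id"].isdigit():
        errors.append("customer_id must be numeric")
    email = record["email"]
    if email.count("@") != 1 or email.startswith("@") or email.endswith("@"):
        errors.append("email must contain one '@' with text on both sides")
    try:
        date.fromisoformat(record["signup_date"])
    except ValueError:
        errors.append("signup_date must be an ISO date (YYYY-MM-DD)")
    return errors


clean = standardize(
    {"customer_id": " 1001 ", "email": " Ana@Example.COM ", "signup_date": "2022-02-03"}
)
print(clean["email"])   # ana@example.com
print(validate(clean))  # []
```

Standardizing first keeps the validation rules simple, since they only need to handle one canonical form of each field.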

8.0 Designing and Implementing Data Integrity Controls

A layered framework of controls is the most effective defense.

- Control Framework:

- Preventive: Stop errors from occurring (e.g., access controls, input validation).

- Detective: Identify errors after they occur (e.g., audit trails, data quality monitoring).

- Corrective: Remediate detected errors (e.g., backup and recovery procedures).
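To make the detective layer concrete, the sketch below shows one possible design for a tamper-evident audit trail: each entry stores a hash of its own content plus the previous entry's hash, so a later edit breaks the chain. This is an illustrative technique chosen for the example, not a method prescribed by the blueprint, and the event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with this event's content."""
    body = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append(log: list, event: dict) -> None:
    """Add an event to the log, chaining it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": entry_hash(prev_hash, event)})


def first_broken_index(log: list):
    """Return the index of the first entry that fails verification, or None."""
    prev_hash = GENESIS
    for i, entry in enumerate(log):
        expected = entry_hash(prev_hash, entry["event"])
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return i
        prev_hash = entry["hash"]
    return None


audit_log = []
append(audit_log, {"user": "steward_1", "action": "update", "field": "price", "new": 10})
append(audit_log, {"user": "steward_2", "action": "update", "field": "price", "new": 12})
append(audit_log, {"user": "steward_1", "action": "delete", "field": "discount"})
print(first_broken_index(audit_log))  # None

audit_log[1]["event"]["new"] = 999    # simulate an unauthorized edit
print(first_broken_index(audit_log))  # 1
```

Detection then hands off to the corrective layer: once a broken entry is found, the affected data can be restored from a trusted backup.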

9.0 Navigating Regulatory Compliance

Data integrity is a legal mandate for many industries.

- SOX: Requires internal controls to ensure the accuracy of financial data.

- GDPR: Mandates principles of “Accuracy” and “Integrity and Confidentiality” for personal data.

- HIPAA: The Security Rule requires safeguards to protect health information from improper alteration or destruction.

Part IV: Technology, Measurement, and Continuous Improvement

10.0 The Technology and Platform Ecosystem

Technology enables and enforces the data integrity program.

- Data Lineage: Tools that trace data from its origin to its destination, enabling root cause analysis of errors and impact analysis of changes.

- Data Observability: Proactive platforms (e.g., Monte Carlo, Acceldata) that use ML to monitor data health across freshness, volume, schema, and quality, detecting “unknown unknowns”: issues that no one anticipated and wrote an explicit rule for.
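The two uses of lineage named above, root cause analysis and impact analysis, are both graph traversals in opposite directions. The sketch below assumes lineage is available as a simple acyclic mapping from each dataset to the datasets derived from it; the dataset names are hypothetical and commercial lineage tools expose this differently.

```python
from collections import deque

# Hypothetical lineage edges: dataset -> datasets derived from it
lineage = {
    "crm.customers": ["staging.customers"],
    "erp.orders": ["staging.orders"],
    "staging.customers": ["warehouse.sales_fact"],
    "staging.orders": ["warehouse.sales_fact"],
    "warehouse.sales_fact": ["report.revenue_dashboard"],
    "report.revenue_dashboard": [],
}


def reachable(graph, start):
    """Breadth-first search: every node reachable from start, excluding start."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def invert(graph):
    """Reverse every edge so the graph can be walked upstream."""
    inverted = {node: [] for node in graph}
    for src, targets in graph.items():
        for target in targets:
            inverted.setdefault(target, []).append(src)
    return inverted


# Impact analysis: what is affected downstream if staging.orders changes?
impact = reachable(lineage, "staging.orders")

# Root cause analysis: which upstream datasets could explain a bad dashboard?
root_candidates = reachable(invert(lineage), "report.revenue_dashboard")

print(sorted(impact))
print(sorted(root_candidates))
```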

11.0 Measuring Performance: KPIs and Dashboards

A program cannot be managed if it is not measured.

- Key Performance Indicators (KPIs):

- Data Quality: Accuracy Rate, Completeness Rate, Consistency Rate.

- Process Efficiency: Time to Detect, Time to Resolve.

- Program Adoption: Percentage of critical data under governance.

- Dashboards: Must be tailored to the audience (executive vs. analyst), tell a clear story, and provide actionable, drill-down insights.
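The KPIs above reduce to simple ratios and time differences once the inputs are defined. The sketch below uses assumed definitions: completeness as the share of required values that are populated, accuracy as the share of rows confirmed correct against a trusted reference, and detection and resolution times taken from incident timestamps. The sample records and field names are hypothetical.

```python
from datetime import datetime

# Hypothetical sample: `verified_correct` would come from a check against a trusted source.
records = [
    {"id": 1, "email": "a@example.com", "country": "BD", "verified_correct": True},
    {"id": 2, "email": None, "country": "BD", "verified_correct": True},
    {"id": 3, "email": "c@example.com", "country": None, "verified_correct": False},
    {"id": 4, "email": "d@example.com", "country": "US", "verified_correct": True},
]
required_fields = ["email", "country"]


def completeness_rate(rows, fields):
    """Share of required field values that are populated."""
    total = len(rows) * len(fields)
    filled = sum(1 for row in rows for f in fields if row.get(f) is not None)
    return filled / total if total else 0.0


def accuracy_rate(rows):
    """Share of rows confirmed correct against a trusted reference."""
    if not rows:
        return 0.0
    return sum(1 for row in rows if row["verified_correct"]) / len(rows)


# Hypothetical incident timestamps for the process-efficiency KPIs.
incident = {
    "occurred": datetime(2022, 2, 1, 9, 0),
    "detected": datetime(2022, 2, 1, 13, 30),
    "resolved": datetime(2022, 2, 2, 9, 30),
}
time_to_detect = incident["detected"] - incident["occurred"]
time_to_resolve = incident["resolved"] - incident["detected"]

print(completeness_rate(records, required_fields))  # 0.75
print(accuracy_rate(records))                       # 0.75
print(time_to_detect, time_to_resolve)              # 4:30:00 20:00:00
```

Whatever definitions are chosen, they should be written down and kept stable so that trends on the dashboard reflect changes in the data rather than changes in the formula.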

12.0 The Data Integrity Maturity Model and Strategic Roadmap

Data integrity is a journey of continuous improvement.

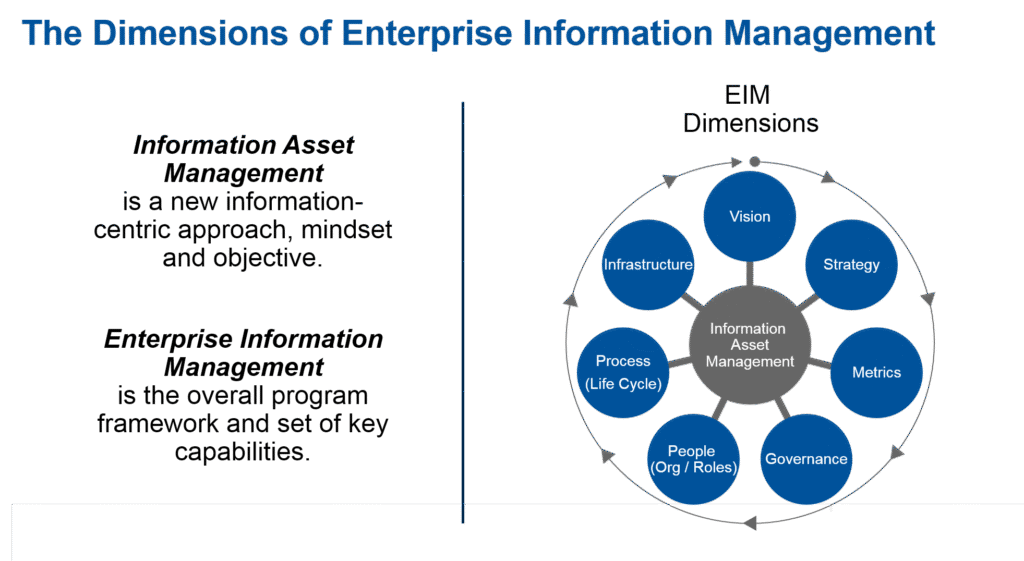

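- Maturity Assessment: Use frameworks such as the CMMI Institute’s Data Management Maturity (DMM) model or Gartner’s Enterprise Information Management (EIM) maturity model to benchmark current capabilities across levels (e.g., the DMM levels Performed, Managed, Defined, Measured, Optimized).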

- Strategic Roadmap: A multi-year plan based on the maturity assessment that outlines a phased approach (Foundational → Expansion → Optimization) to advance the program.

13.0 Fostering a Culture of Integrity through Data Literacy

The human element is paramount. A data literacy program builds the organization’s ability to read, understand, and communicate data as information, transforming data integrity into a shared responsibility.

Part V: Advanced Topics and Future Outlook

14.0 Overcoming Implementation Challenges

- Common Pitfalls: Lack of business buy-in, cultural resistance, data silos, legacy systems, and failure to demonstrate business value.

- Mitigation: Secure executive sponsorship, start small to deliver incremental value, focus on change management, and measure and market success.

15.0 The Future of Data Integrity: AI, ML, and Automation

AI and machine learning are transforming data integrity from a reactive discipline into a proactive, automated one.

- Impact of AI/ML:

- Automated Anomaly Detection: ML algorithms learn normal data patterns and flag deviations in real time.

- Predictive Data Quality: Models can predict potential quality issues before they occur.

- Automated Data Cleansing: AI can learn to identify and merge duplicates or suggest corrections.

- Intelligent Data Classification: ML can automatically identify sensitive data and apply appropriate governance policies.
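The idea behind automated anomaly detection can be shown with a deliberately simple statistical stand-in: learn what is normal from history and flag values that deviate too far. The sketch below uses a z-score on daily row counts rather than a trained ML model; the threshold of 3 standard deviations and the sample counts are assumptions for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, new_value, threshold=3.0):
    """Flag new_value when it lies more than `threshold` standard deviations
    from the mean of the historical values."""
    if len(history) < 2:
        return False  # not enough history to estimate a spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return new_value != mu
    return abs(new_value - mu) / sigma > threshold


# Hypothetical daily row counts for a table that normally loads about 10,000 rows.
daily_row_counts = [10120, 9980, 10050, 10210, 9940, 10090, 10030]

print(is_anomalous(daily_row_counts, 10110))  # False
print(is_anomalous(daily_row_counts, 4200))   # True
```

Production systems typically account for seasonality and trend as well, which is where learned models improve on a fixed threshold like this one.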
